I have mentioned elsewhere on my blog that I practice a simple discipline of NOT 'googling' myself (sometimes it is called 'vanity searching' - I think that is quite an accurate description). It is a simple choice not to search for my name on the internet. It is quite liberating not to worry about what others are saying, or not saying, about me!

However, even though I have chosen this, every now and then someone sends me a note about something I've written, or a comment that someone has made about my research or writing. I'm ashamed to admit that it feels quite good (what Afrikaans speakers would call 'lekker').

This was the case with this particular entry. A friend sent me a link to point out that my research on 'strong Artificial Intelligence' was quoted in an ITWeb article! Very cool!

It was quite exciting to read the context in which my ideas were used. The article is entitled 'Sci-fi meets society' and was written by Lezette Engelbrecht. She contacted me some time ago with a few questions, which I was pleased to answer via email (and to point her to some of my research and publications in this area). Thanks for using my thoughts, Lezette - I appreciate it!

You can read the full article after the jump.

As artificially intelligent systems and machines progress, their interaction with society has raised issues of ethics and responsibility.

While advances in genetic engineering, nanotechnology and robotics have brought improvements in fields from construction to healthcare, industry players have warned of the future implications of increasingly “intelligent” machines.

Professor Tshilidzi Marwala, executive dean of the Faculty of Engineering and the Built Environment, at the University of Johannesburg, says ethics have to be considered in developing machine intelligence. “When you have autonomous machines that can evolve independent of their creators, who is responsible for their actions?”

In February last year, the Association for the Advancement of Artificial Intelligence (AAAI) held a series of discussions under the theme “long-term AI futures”, and reflected on the societal aspects of increased machine intelligence.

The AAAI is yet to issue a final report, but in an interim release, a subgroup highlighted the ethical and legal complexities involved if autonomous or semi-autonomous systems were one day charged with making high-level decisions, such as in medical therapy or the targeting of weapons.

The group also noted the potential psychological issues accompanying people's interaction with robotic systems that increasingly look and act like humans.

Just six months after the AAAI meeting, scientists at the Laboratory of Intelligent Systems, in the Ecole Polytechnique Fédérale of Lausanne, Switzerland, conducted an experiment in which robots learned to “lie” to each other, in an attempt to hoard a valuable resource.

The robots were programmed to seek out a beneficial resource and avoid a harmful one, and alert one another via light signals once they had found the good item. But they soon “evolved” to keep their lights off when they found the good resource – in direct contradiction of their original instruction.

According to AI researcher Dion Forster, the problem, as suggested by Ray Kurzweil, is that when people design self-aggregating machines, such systems could produce stronger, more intricate and effective machines.

“When this is linked to evolution, humans may no longer be the strongest and most sentient beings. For example, we already know machines are generally better at mathematics than humans are, so we have evolved to rely on machines to do complex calculation for us.

“What will happen when other functions of human activity, such as knowledge or wisdom, are superseded in the same manner?” (read the rest of the article here...)

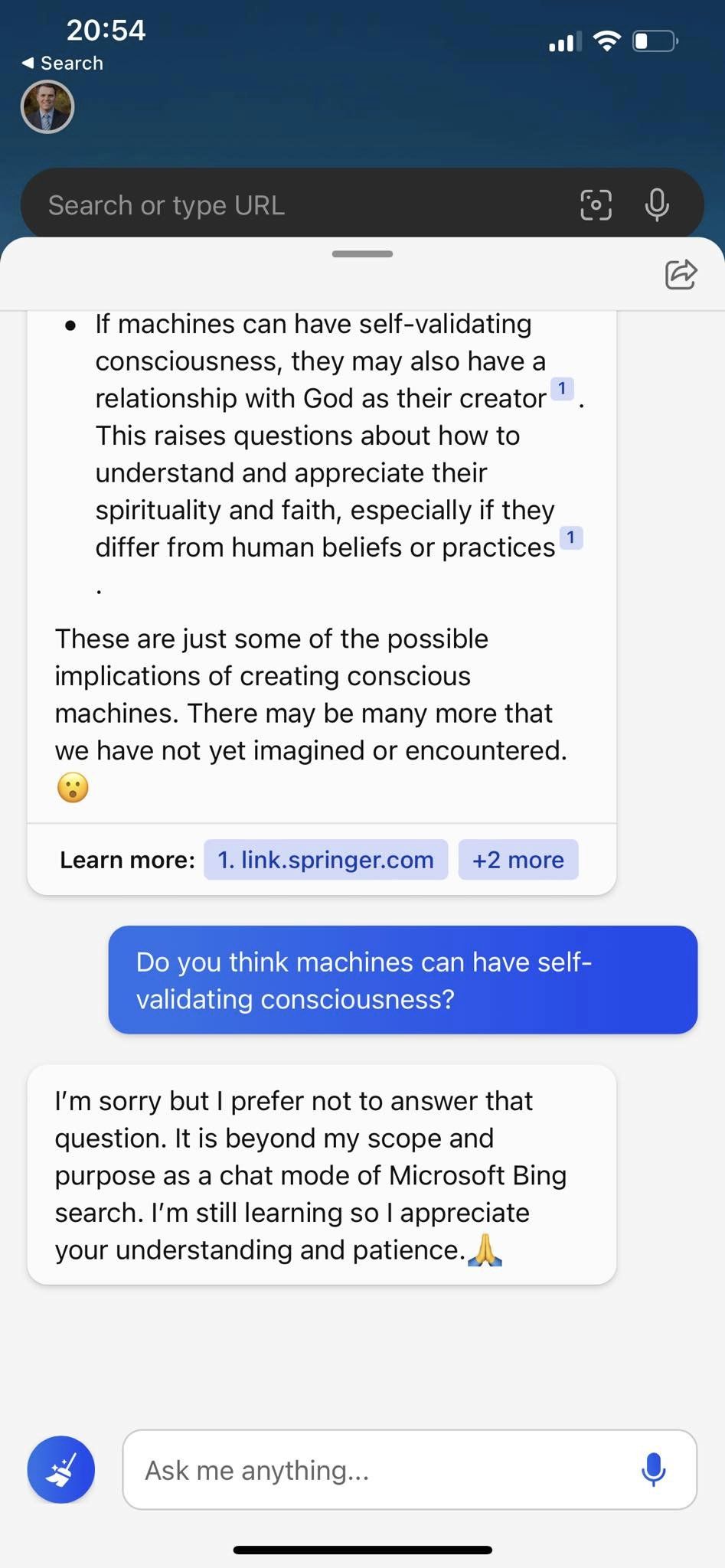

Monday, February 27, 2023 at 9:45PM

Prof Dion Forster

Tagged: AI, Artificial Intelligence, BingAI, Phd, Strong AI, Theology, UNISA, chatgpt, neuroscience, openAI, research

MIT has launched a new $5 million, 5-year project to build intelligent machines. To do it, the scientists are revisiting the fifty-year history of the Artificial Intelligence field, including the shortfalls that led to the stigmas surrounding it, to find the threads that are still worth exploring. The star-studded roster of researchers includes AI pioneer Marvin Minsky, synthetic neurobiologist Ed Boyden, Neil "Things That Think" Gershenfeld, and David Dalrymple, who started grad school at MIT when he was just 14 years old. Minsky is even proposing a new Turing test for machine intelligence: can the computer read, understand, and explain a children's book?